Breaking Down Complex AI Tasks

My Take on Agentic Fine-Tuning

I've been experimenting with domain-specific fine-tuning for a while, and I keep running into the same issue: the traditional approach of fine-tuning large language models on entire datasets is inefficient and often suboptimal. Here's how I'm solving this with what I call "Agentic Fine-Tuning."

The Problem I Was Trying to Solve

So here's the thing - we've all been there. You've got this large language model, and you want it to do something specific, like converting natural language to SQL queries. The traditional approach? Throw a bunch of data at it and fine-tune the entire model. But let's be real, that's kind of like using a sledgehammer to crack a nut.

My Approach: Breaking It Down

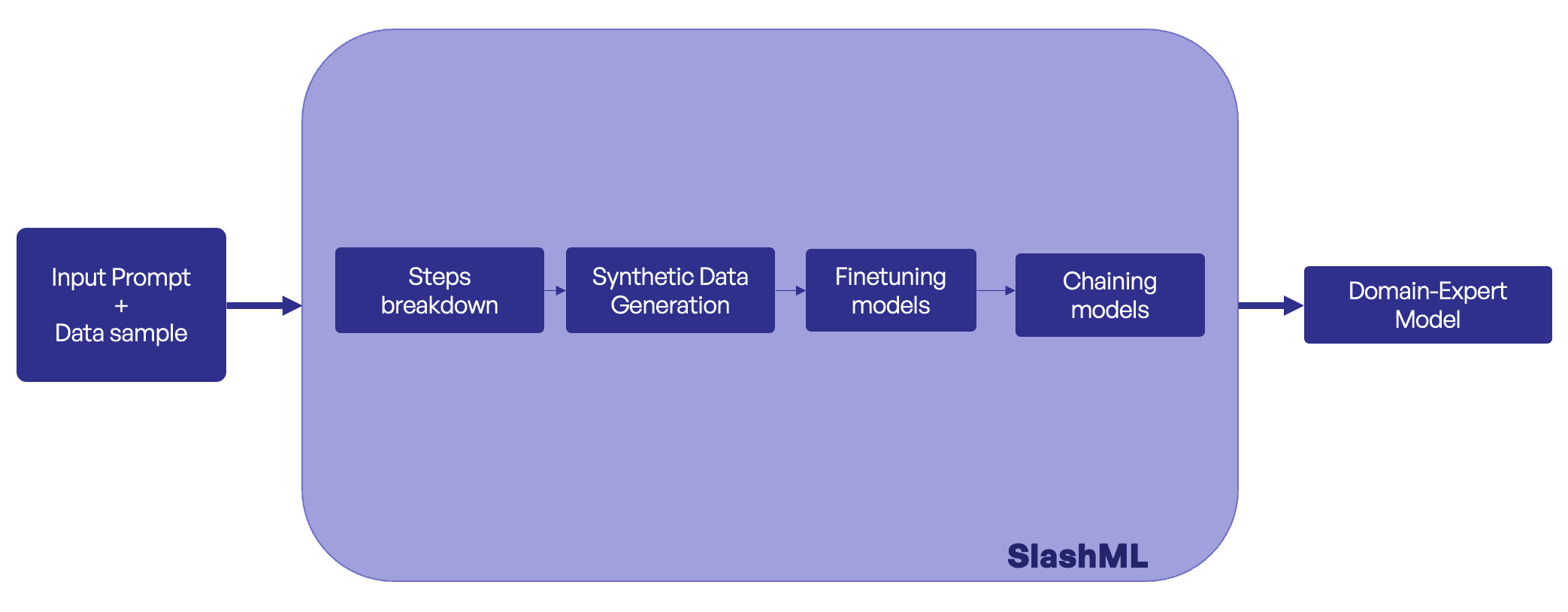

Instead of treating these tasks as one big chunk, I started thinking: "What if we break this down into smaller, more manageable pieces?" Here's what I came up with:

1. First, we look at what we're trying to do and break it into logical steps.
2. For each step, we generate specific training data (this is where it gets interesting).
3. We train smaller, specialized models for each step.
4. Finally, we chain these models together.

Let's Get Real: A Quick Example

Take my text-to-SQL project. Instead of one model trying to do everything, I broke it down like this:

Each model has one job, and it does it really well.
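To make the chaining idea concrete, here's a minimal sketch. Everything in it is an assumption for illustration: the step names (`pick_tables`, `pick_columns`, `write_sql`), the toy `SCHEMA`, and the `PipelineState` container are hypothetical, and each step is a plain Python stand-in for where a small fine-tuned model would sit.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical toy schema, used only for this illustration.
SCHEMA = {
    "users": ["id", "name", "country"],
    "orders": ["id", "user_id", "total"],
}


@dataclass
class PipelineState:
    """State handed from one step to the next; each step fills in its own field."""
    question: str
    tables: List[str] = field(default_factory=list)
    columns: List[str] = field(default_factory=list)
    sql: str = ""


Step = Callable[[PipelineState], PipelineState]


def pick_tables(state: PipelineState) -> PipelineState:
    # Stand-in for a specialized model that only decides which tables are relevant.
    words = state.question.lower().split()
    state.tables = [t for t in SCHEMA if t in words]
    return state


def pick_columns(state: PipelineState) -> PipelineState:
    # Stand-in for a specialized model that only picks columns from the chosen tables.
    words = state.question.lower().split()
    state.columns = [c for t in state.tables for c in SCHEMA[t] if c in words]
    return state


def write_sql(state: PipelineState) -> PipelineState:
    # Stand-in for a specialized model that only assembles the final query.
    cols = ", ".join(state.columns) or "*"
    state.sql = f"SELECT {cols} FROM {', '.join(state.tables)};"
    return state


def run_chain(question: str, steps: List[Step]) -> PipelineState:
    """Run the steps in order, passing the shared state along."""
    state = PipelineState(question=question)
    for step in steps:
        state = step(state)
    return state


result = run_chain(
    "show name and country of users",
    [pick_tables, pick_columns, write_sql],
)
print(result.sql)
```

Because every step takes and returns the same state object, swapping one stand-in for a real model call shouldn't require touching the others.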

Why This Actually Works

Here's what I've found while building this:

- The models are way more accurate because they're focused on one thing
- When something goes wrong, it's super easy to figure out where
- You can update one part without touching the others
- It's actually more efficient than training one massive model
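The "easy to figure out where" point is simple to sketch: record what each step produced, and stop at the first one that fails. This is a rough illustration only; `run_with_trace` and the three toy steps are hypothetical names, and the steps are plain functions standing in for specialized models.

```python
from typing import Any, Callable, List, Tuple

# Hypothetical set of tables the toy pipeline knows about.
KNOWN_TABLES = {"users", "orders"}


def normalize_question(question: str) -> str:
    return question.strip().lower()


def find_table(question: str) -> str:
    for word in question.split():
        if word in KNOWN_TABLES:
            return word
    raise LookupError(f"no known table in {question!r}")


def build_query(table: str) -> str:
    return f"SELECT * FROM {table};"


def run_with_trace(
    value: Any, steps: List[Callable[[Any], Any]]
) -> Tuple[Any, List[Tuple[str, Any]]]:
    """Run steps in order, logging (step name, output) and stopping at the first failure."""
    trace: List[Tuple[str, Any]] = []
    for step in steps:
        try:
            value = step(value)
        except Exception as exc:
            # Record which step broke, then stop; later steps never run.
            trace.append((step.__name__, f"FAILED: {exc!r}"))
            break
        trace.append((step.__name__, value))
    return value, trace


steps = [normalize_question, find_table, build_query]

# "invoices" is not a known table, so the trace should end at find_table.
_, failed_trace = run_with_trace("  List all invoices ", steps)
for name, output in failed_trace:
    print(name, "->", output)

ok_value, ok_trace = run_with_trace("Show users", steps)
print(ok_value)
```

With one big model you'd only see a wrong final query; here the trace points at the step that needs new data or retraining.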

The Cool Part: Synthetic Data Generation

One of the most interesting bits is how we generate training data. For each step, we create specific examples that focus on that particular task. It's like having a specialized teacher for each part of the process.
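Here's a minimal sketch of what per-step data can look like, using a table-selection step as the example. The template filling is a stand-in chosen for simplicity, not necessarily how the data is generated in practice (a larger model could produce the questions instead), and `SCHEMA`, `TEMPLATES`, and `make_table_selection_examples` are all hypothetical names.

```python
import json
import random

# Hypothetical toy schema and question templates, for illustration only.
SCHEMA = {
    "users": ["id", "name", "country"],
    "orders": ["id", "user_id", "total"],
}
TEMPLATES = [
    "show all {table}",
    "how many {table} are there",
    "list {table} sorted by {column}",
]


def make_table_selection_examples(n: int, seed: int = 0) -> list:
    """Build n (input, output) pairs for ONE step: question -> table name."""
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    examples = []
    for _ in range(n):
        table = rng.choice(sorted(SCHEMA))
        column = rng.choice(SCHEMA[table])
        # Templates that don't use {column} simply ignore it.
        question = rng.choice(TEMPLATES).format(table=table, column=column)
        examples.append({"input": question, "output": table})
    return examples


examples = make_table_selection_examples(5)

# One JSON object per line (JSONL), a common shape for fine-tuning data.
jsonl = "\n".join(json.dumps(example) for example in examples)
print(jsonl)
```

The key property is that the label is only the table name, not a full SQL query, so the dataset teaches exactly one step.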

Zero Infrastructure: The Game Changer

Here's something most people don't talk about: deploying fine-tuned models is just as hard as training them. You need GPUs, cloud configs, security setups... it's a mess. But what if you didn't have to worry about any of that?

Our platform will automagically handle all of it, in your private cloud!

It's like having a DevOps team in your pocket. Upload your data, define your task, and get a production endpoint in hours, not weeks.

Building This Yourself

We're putting together a platform that helps you implement this approach end-to-end. It guides you through:

- Breaking down your task
- Generating synthetic data for each step
- Training specialized models
- Chaining everything together

Stay tuned :)

