Published on June 6, 2024

Deploying LLaMA 3 on AWS

Faizan Khan

LLaMA is a line of open-source models released by Meta, the latest version of which is LLaMA 3. Meta plans to incorporate LLaMA 3 into most of its social media applications. It has released two versions of LLaMA 3, one with 8B parameters and one with 70B parameters. [1] [2]

The 70B version of LLaMA 3 was trained on a custom-built 24k GPU cluster on over 15T tokens of data, a dataset roughly 7x larger than the one used for LLaMA 2. [2] [3]

Where to get LLaMA 3

LLaMA 3 can be downloaded for free from Meta's website or pulled from Hugging Face. It comes in two parameter sizes, 8 billion (8B) and 70 billion (70B), and in two variants: pre-trained, which is a base model for next-token prediction, and instruction-tuned, which is fine-tuned to follow user commands. Users need to sign up to access these versions. [2]

In this tutorial we will get LLaMA 3 from Hugging Face.

How good is it

According to popular leaderboards such as LMSYS and Hugging Face, LLaMA 3 8B outperforms GPT-3.5-turbo and LLaMA 3 70B outperforms the GPT-4 base model as of this writing. Similarly, among open-source models, LLaMA 3 8B outperforms most well-known models such as Google's Gemma 7B and Mistral 7B Instruct.

Hardware Requirements

To deploy any model, we first need to determine the compute requirements. As a basic rule of thumb, the space a model needs on disk is its number of parameters multiplied by the bytes per parameter, which is 2 bytes at 16-bit precision. Loading it into memory usually adds overhead, so it needs roughly 1.5x-4x its size on disk. [4]

Since LLaMA3-8B has 8 billion parameters, it requires roughly 16GB of storage and around 24GB of RAM. The memory can be GPU or CPU memory, with the GPU giving faster inference. We are going to use the GPU. Similarly, LLaMA3-70B requires around 140GB of storage and roughly 160GB of RAM.
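
The arithmetic above can be sketched in a few lines of Python. This is only a back-of-the-envelope estimate: it assumes 16-bit weights (2 bytes per parameter) and uses the lower end of the overhead range (1.5x) as its default. The function names are illustrative and not part of any library.

```python
def estimate_disk_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate on-disk size in GB, assuming 16-bit (2-byte) weights by default."""
    return num_params * bytes_per_param / 1e9


def estimate_memory_gb(num_params: float, overhead: float = 1.5,
                       bytes_per_param: int = 2) -> float:
    """Approximate memory needed to load the model, using a rule-of-thumb overhead factor."""
    return estimate_disk_gb(num_params, bytes_per_param) * overhead


if __name__ == "__main__":
    # Parameter counts taken from the text: 8 billion and 70 billion.
    for name, params in [("LLaMA3-8B", 8e9), ("LLaMA3-70B", 70e9)]:
        print(f"{name}: ~{estimate_disk_gb(params):.0f} GB on disk, "
              f"~{estimate_memory_gb(params):.0f} GB in memory at 1.5x overhead")
```

For the 8B model this reproduces the 16GB and 24GB figures. The 160GB quoted for the 70B model corresponds to a smaller overhead factor than 1.5x, so treat the factor as a tunable assumption rather than a fixed constant.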

Choosing the Right Instance on AWS

So we need roughly 24GB of RAM and 16GB of disk space for LLaMA-3-8B. We want an instance with a single GPU of a recent generation. For the purpose of this tutorial, we will go with the g5.xlarge. It has an Nvidia A10G GPU with 24GB of GPU memory, which gives better performance than the T4-based instances and is cheaper than the instances with A100 GPUs. [5]

Cost and Pricing

All EC2 instances use on-demand pricing unless they are reserved. This means that you are charged for the time the instance is running. You are not charged for compute while the instance is stopped, although attached EBS storage is still billed. The price for a g5.xlarge in us-east-1 is roughly $1/hr. This amounts to about $732 per month if it's always on. You will save money if the server spins down after inactivity. You can attach triggers to handle that, but it is beyond the scope of this article. [6]
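
To see how much spinning the server down can save, here is a minimal sketch of the cost arithmetic. It assumes the rough $1/hr rate quoted above and a 30.5-day month (which reproduces the $732 figure); it covers compute only and ignores storage and data transfer charges. The function name is illustrative.

```python
def monthly_cost_usd(hourly_rate: float, hours_per_day: float = 24.0,
                     days_per_month: float = 30.5) -> float:
    """Compute-only monthly cost for an on-demand instance."""
    return hourly_rate * hours_per_day * days_per_month


if __name__ == "__main__":
    always_on = monthly_cost_usd(1.0)                       # running 24 hours a day
    office_hours = monthly_cost_usd(1.0, hours_per_day=8)   # running 8 hours a day
    print(f"Always on: ${always_on:.0f}/month")
    print(f"8 hours a day: ${office_hours:.0f}/month")
```

Check the current AWS pricing page for the exact hourly rate in your region before relying on these numbers.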

Launching the relevant Instance

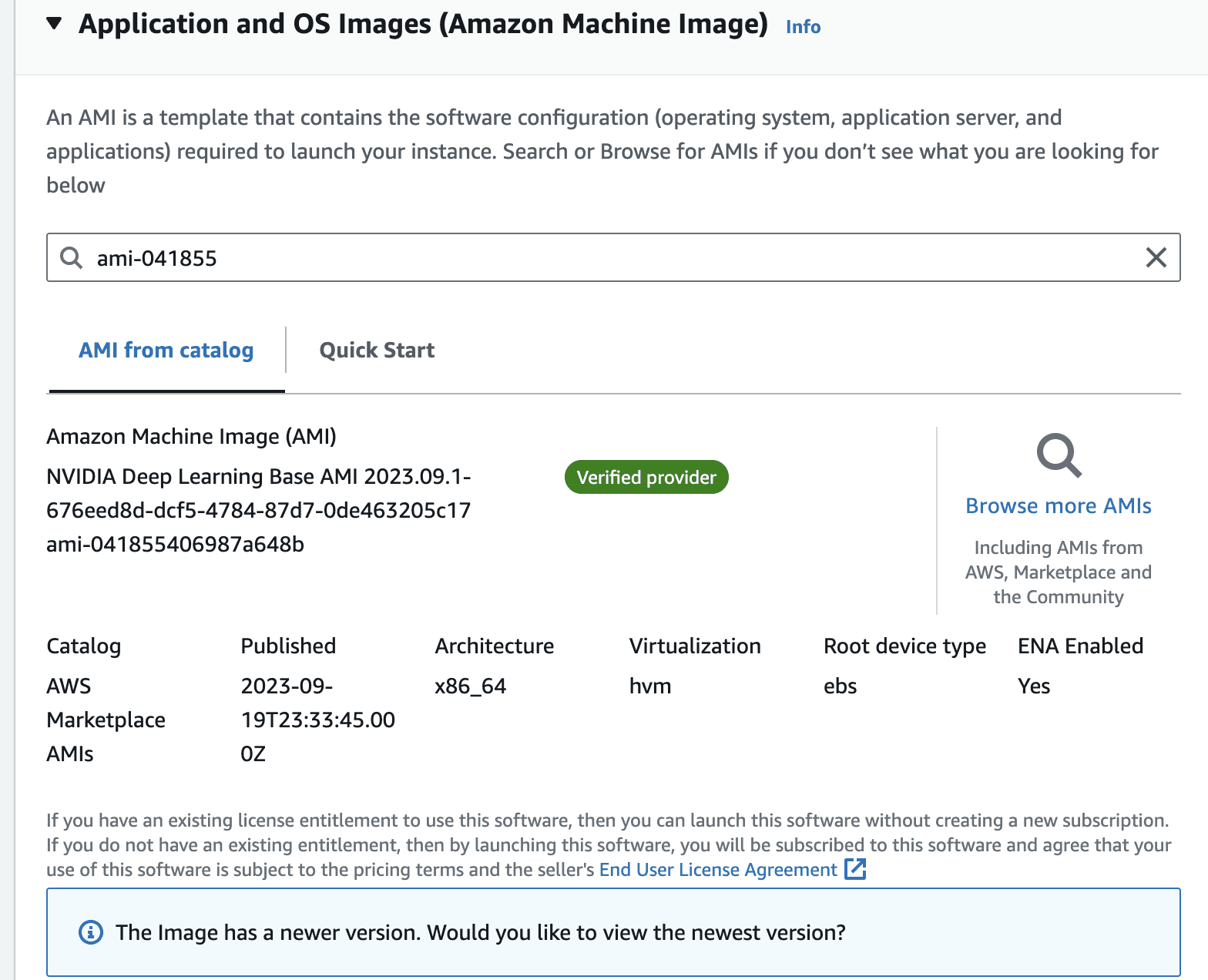

Go to the launch instance page in the relevant region. In the Application and OS Images section, type ami-041855 and press Enter. This is the ID of the base image we will use to spin up our instance.

This will take you to the AMI search page. Go to the Community AMIs tab and select the Nvidia Deep Learning Base AMI.

You can technically start with any other base image, but this AMI, provided by Amazon, has all of the dependencies pre-installed. You can read more about this image in its release notes.

Once you select the image, the view should look like the following.

In the Instance type panel, select g5.xlarge from the dropdown.

Make sure you attach an ssh key-pair to the instance.

Leave the default network settings, i.e. only allow SSH traffic for now. We will modify this in a bit.

In the Configure storage section, attach an external volume and leave the default size of 128GB. This is mostly useful if you want to persist data between reboots. The default storage on the instance is ephemeral, which means that data can be lost if the instance is restarted.

The summary of the instance should look like the following. Click on Launch instance.
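
If you prefer to script the console steps above, the same settings can be expressed as parameters for the EC2 RunInstances call. The sketch below is an assumption-laden illustration, not the method used in this article: the AMI ID and key-pair name are placeholders you must supply, and the device name `/dev/sdf` and volume type `gp3` are hypothetical choices. Actually launching requires the boto3 package and configured AWS credentials.

```python
def build_launch_params(ami_id: str, key_name: str,
                        instance_type: str = "g5.xlarge",
                        volume_gb: int = 128,
                        device_name: str = "/dev/sdf") -> dict:
    """Build keyword arguments for the EC2 RunInstances call.

    device_name and the gp3 volume type are illustrative assumptions.
    """
    return {
        "ImageId": ami_id,            # ID of the Deep Learning Base AMI
        "InstanceType": instance_type,
        "KeyName": key_name,          # name of an existing SSH key pair
        "MinCount": 1,
        "MaxCount": 1,
        "BlockDeviceMappings": [
            {
                "DeviceName": device_name,
                "Ebs": {"VolumeSize": volume_gb, "VolumeType": "gp3"},
            }
        ],
    }


def launch_instance(params: dict, region: str = "us-east-1") -> str:
    """Launch one instance and return its ID (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so build_launch_params works without boto3

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.run_instances(**params)
    return response["Instances"][0]["InstanceId"]
```

A call would look like `launch_instance(build_launch_params("<your-ami-id>", "<your-key-name>"))`, with both placeholders replaced by your own values.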

Finally, once the instance is ready, modify the security group to allow incoming requests at port 8000. This is because our inference server will be running on port 8000.
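
The security group change can also be scripted. The following is a minimal sketch under stated assumptions: the security group ID and the CIDR range are placeholders, and applying the rule requires boto3 and AWS credentials. Restricting the CIDR to your own IP address is safer than opening the port to everyone.

```python
def build_ingress_rule(group_id: str, cidr: str, port: int = 8000) -> dict:
    """Build keyword arguments for EC2 AuthorizeSecurityGroupIngress."""
    return {
        "GroupId": group_id,
        "IpPermissions": [
            {
                "IpProtocol": "tcp",
                "FromPort": port,   # single-port range: FromPort == ToPort
                "ToPort": port,
                "IpRanges": [{"CidrIp": cidr,
                              "Description": "inference server"}],
            }
        ],
    }


def open_port(rule: dict, region: str = "us-east-1") -> None:
    """Apply the ingress rule (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so build_ingress_rule works without boto3

    ec2 = boto3.client("ec2", region_name=region)
    ec2.authorize_security_group_ingress(**rule)
```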

Preparing the Instance

We are going to use vLLM to serve the language model on our server. vLLM is a Python package designed for fast inference of open-source language models. It speeds up inference with techniques such as continuous batching of incoming requests and efficient caching of attention keys and values. [7]

Once the instance is running, establish an SSH connection to it from your terminal with the following command:

ssh -i path_to_your_key.pem ubuntu@instance_public_ip

Once inside the instance, create a virtual environment and install vLLM as follows. This will take a few minutes.

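# 'vllm-env' is an arbitrary name; assumes the python3 venv module is available on the image
python3 -m venv vllm-env
source vllm-env/bin/activate
pip install vllm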

If you encounter compatibility problems while installing, it may be simpler to build vLLM from source or use its Docker image; see the vLLM installation instructions.

Launch the Inference Server

Once the installation is complete, you can start an OpenAI-compatible inference server by running the following command. The model name is its Hugging Face name [8]. The server takes a few minutes to start fully.

python -m vllm.entrypoints.openai.api_server --model meta-llama/Meta-Llama-3-8B-Instruct

Running the above command for the first time will return a 401 error, because downloading the model requires access to your Hugging Face account. To grant access, run the following command in the terminal.

huggingface-cli login

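This will ask for a token, which you can generate from your Hugging Face dashboard. You might also need access to the model from Meta. You can apply for it by going to the relevant model page and submitting a request. In our case the model that we are interested in is meta-llama/Meta-Llama-3-8B: https://huggingface.co/meta-llama/Meta-Llama-3-8B. Note that the request example below uses the instruction-tuned variant, meta-llama/Meta-Llama-3-8B-Instruct; the model name in the request must match the model the server was launched with.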

Once the server is running, you will see something like the following

INFO: Application startup complete.
INFO: Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit)

This means that our server is running on port 8000, and you can call it from your computer by running the following curl command.

curl http://server_public_ip_address:8000/v1/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "meta-llama/Meta-Llama-3-8B-Instruct",
    "prompt": "What are the most popular quantization techniques for LLMs?"
  }'
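
The same request can be sent from Python. Below is a minimal sketch that uses only the standard library; the function names are illustrative, the IP address is a placeholder, and the `max_tokens` value is an assumption added here to control the response length (it is not part of the curl example).

```python
import json
import urllib.request


def build_completion_request(server_ip: str, prompt: str,
                             model: str = "meta-llama/Meta-Llama-3-8B-Instruct",
                             max_tokens: int = 128) -> urllib.request.Request:
    """Build a POST request for the server's /v1/completions endpoint."""
    url = f"http://{server_ip}:8000/v1/completions"
    payload = {"model": model, "prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(server_ip: str, prompt: str) -> str:
    """Send the prompt to the running server and return the generated text."""
    request = build_completion_request(server_ip, prompt)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # OpenAI-style completion responses carry the text in choices[0].text.
    return body["choices"][0]["text"]
```

With the server running, `complete("<server_public_ip_address>", "What are the most popular quantization techniques for LLMs?")` should return the model's answer as a string.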

You now have a production-ready inference server that can handle many parallel requests, thanks to vLLM's continuous batching. However, a single instance can only serve so much. If the number of requests increases, you might have to think about horizontal scaling, i.e. spinning up other instances and load balancing the requests among them.
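
To make the idea of load balancing concrete, here is a toy round-robin sketch that cycles through a list of server URLs. It is purely illustrative: the class name and URLs are hypothetical, and in practice you would more likely put a managed load balancer in front of the instances than write your own.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out server URLs in a fixed rotating order."""

    def __init__(self, server_urls):
        servers = list(server_urls)
        if not servers:
            raise ValueError("at least one server URL is required")
        self._servers = cycle(servers)

    def next_server(self) -> str:
        """Return the URL that should receive the next request."""
        return next(self._servers)


if __name__ == "__main__":
    # Hypothetical addresses of two inference instances.
    balancer = RoundRobinBalancer(["http://10.0.0.1:8000", "http://10.0.0.2:8000"])
    for _ in range(4):
        print(balancer.next_server())
```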

Similarly, you might want to add authentication, logging, and autoscaling to this instance. For this you can use AWS services such as Cognito, Lambda, and CloudWatch. If you don't want to do all of that yourself, you can check out https://slashml.com, which handles all of these things for you.

If you have questions about LLaMA 3 and AI deployment in general, please don't hesitate to email faizank@slashml.com. It's always a pleasure to help!

References
